Hung Ngo ’26, computer science and engineering, learned a great deal during his first year at Bucknell. He became proficient in the Swift programming language, learned to use augmented reality (AR) frameworks to build AR-powered apps, and studied machine learning and deep learning.

“I learned a lot during my first year,” says Ngo. “I am humbled. It’s amazing what I’ve been able to master during just one year in the Computer Science Department at Bucknell.”

Ngo is putting some of what he learned in his first year toward a good cause, inspired by watching his 75-year-old grandmother struggle with the keyboard on a mobile device because of her limited dexterity.

“I watch my grandmother try to use an iPhone, and it is challenging for her,” says Ngo. “I want to help her and others who have trouble, whether it is someone who is disabled or someone who can benefit from more accessible options as they use their devices.”

This summer, Ngo is working with Prof. Rajesh Kumar on research for a touchless typing application. While more than 100 Bucknell engineering students are conducting research on campus this summer, Ngo navigated a challenging process that allowed him to carry out his research from his home in Vietnam, and he is making great progress.

Ngo is writing code for an application that uses Apple’s existing ARKit technology to track a user’s eyes, enabling hands-free typing on a virtual keyboard. ARKit combines device motion tracking, camera scene capture, advanced scene processing and display conveniences to simplify the task of building an AR experience.

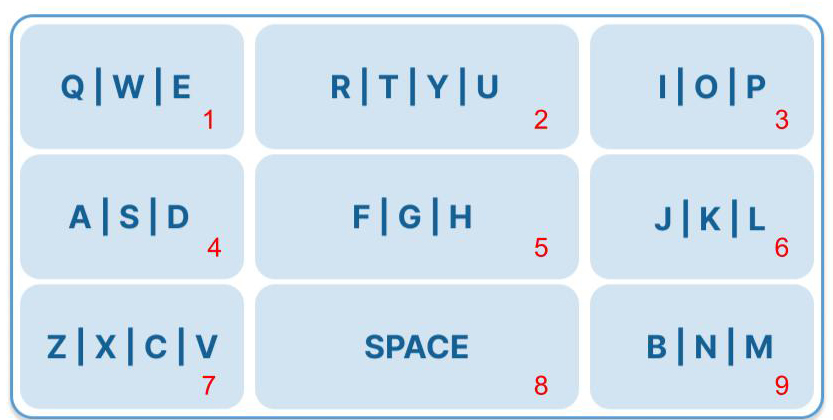

To make this work, Ngo divided the keyboard into nine groups of three or four keys each. As the device tracks which group the user’s eyes are looking at, it produces a numerical output, one digit per letter. For example, the word ‘Bucknell’ would be entered as ‘92769166’.
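To make the encoding concrete, here is a minimal Python sketch. The article does not describe Ngo’s actual key groups, so the `GROUPS` table below is a hypothetical split of the QWERTY letter rows, chosen so that it reproduces the ‘Bucknell’ example. Because it covers only the 26 letters, one group holds a single letter. The helper `gaze_to_group` is also an assumption: it presumes the gaze point (which ARKit estimates on the Swift side) has already been projected onto the keyboard and normalized, and that the nine groups sit in a 3 × 3 grid.

```python
# Hypothetical split of the QWERTY letter rows into nine groups, numbered
# left to right, top to bottom. This is NOT the layout from the article.
GROUPS = {
    1: "qwe", 2: "rtyu", 3: "iop",
    4: "asd", 5: "fgh", 6: "jkl",
    7: "zxc", 8: "v", 9: "bnm",
}

# Invert the table so each letter can look up its group digit.
LETTER_TO_GROUP = {ch: str(g) for g, letters in GROUPS.items() for ch in letters}


def gaze_to_group(x: float, y: float) -> str:
    """Map a gaze point normalized to the keyboard area (0 <= x, y <= 1,
    origin at top-left) to a group digit, assuming a 3 x 3 grid of groups."""
    col = min(int(x * 3), 2)  # clamp so x == 1.0 stays in the last column
    row = min(int(y * 3), 2)
    return str(row * 3 + col + 1)


def encode(word: str) -> str:
    """Return the digit sequence a user's gaze would produce when typing `word`."""
    return "".join(LETTER_TO_GROUP[ch] for ch in word.lower())


print(encode("Bucknell"))       # 92769166
print(gaze_to_group(0.1, 0.9))  # 7 (bottom-left group)
```

Since nine groups must cover 26 letters, the mapping is many-to-one: different words can produce the same digit string, which is why a separate decoding step is needed.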

The code Ngo has written translates the sequences of numbers produced by a user’s gaze into English sentences. Because several words can share the same number sequence, he plans to use the sequence-to-sequence (Seq2Seq) machine learning architecture used in Google Translate to accurately predict the words a user wants to type from eye movements across the virtual keyboard alone.
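To see why a learned model helps, consider a much simpler stand-in: a frequency-ranked dictionary lookup in the style of T9 predictive text. This is not the Seq2Seq approach described above; it is a baseline sketch that reuses the same hypothetical nine-group layout (an assumption, not Ngo’s layout) and a tiny made-up vocabulary with invented counts.

```python
from collections import defaultdict

# Same hypothetical nine-group layout as the encoding sketch (an assumption).
GROUPS = {
    1: "qwe", 2: "rtyu", 3: "iop",
    4: "asd", 5: "fgh", 6: "jkl",
    7: "zxc", 8: "v", 9: "bnm",
}
LETTER_TO_GROUP = {ch: str(g) for g, letters in GROUPS.items() for ch in letters}


def encode(word: str) -> str:
    """Digit string for a word under the hypothetical layout."""
    return "".join(LETTER_TO_GROUP[ch] for ch in word.lower())


def build_index(word_counts: dict[str, int]) -> dict[str, list[str]]:
    """Group vocabulary words by digit code, most frequent word first."""
    buckets = defaultdict(list)
    for word, count in word_counts.items():
        buckets[encode(word)].append((count, word))
    return {
        code: [w for _, w in sorted(pairs, key=lambda p: (-p[0], p[1]))]
        for code, pairs in buckets.items()
    }


def decode(codes: list[str], index: dict[str, list[str]]) -> str:
    """Pick the most frequent candidate for each code; '?' if none is known."""
    return " ".join(index.get(code, ["?"])[0] for code in codes)


# Made-up counts for illustration only.
WORD_COUNTS = {"the": 1000, "in": 500, "on": 300, "day": 90, "say": 40}

index = build_index(WORD_COUNTS)
print(index["39"])                          # ['in', 'on']
print(decode(["39", "251", "442"], index))  # in the day
```

The weakness is visible in the output: ‘in’ and ‘on’ share the code 39, and a per-word lookup always picks ‘in’, even when the user meant ‘on the day’. A sequence model can condition on the surrounding codes to resolve that kind of ambiguity, which is the motivation for a Seq2Seq architecture.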

Ngo shared that his biggest challenge so far has been collecting enough data to train and test his model. He has already lined up computer science classmates to help test the app and provide additional data this fall, but he is looking for more volunteers.

Ngo’s ultimate goal is to publish a paper about his research. He is already preparing to present the research at a conference this September, and he hopes to connect with someone who can help bring the technology to a wider audience, particularly people like his grandmother, who stand to benefit from all that Ngo has learned in such a short time.